- TheTechOasis

- Posts

- Google Opens its Magic Department

Google Opens its Magic Department

🏝 TheTechOasis 🏝

Breaking down the most advanced AI systems in the world to prepare you for your future.

5-minute weekly reads.

TLDR:

AI Research of the Week: Google releases RealLife, a new Diffusion Model that Feels like Magic

Leaders: The ‘Everything will be an LLM’ Paradigm

🤯 AI Research of the week 🤯

What I’m about to show you is unprecedented and almost magic.

Google Research, in collaboration with Cornell University, has announced RealFill, an image inpainting and outpainting model with shocking results.

The model takes as a reference a set of images and allows you to fill in missing parts of a target image based on the former.

But what does that mean?

The model is capable of using the references on a handful of pictures to fill (inpaint) or expand (outpaint, the case below) while respecting the reference.

It’s capable of doing this despite the references being in other camera angles or lighting, as the model extracts the key features of those images and successfully applies them to the new generations.

This way, you don’t have to imagine how the perfect-but-cropped image should have been like.

Now, you can simply ask for it.

But let’s dive deep into how they actually created this magical model.

The 21st Century Michelangelo

Like most state-of-the-art Generative AI models today, RealLife is a diffusion model.

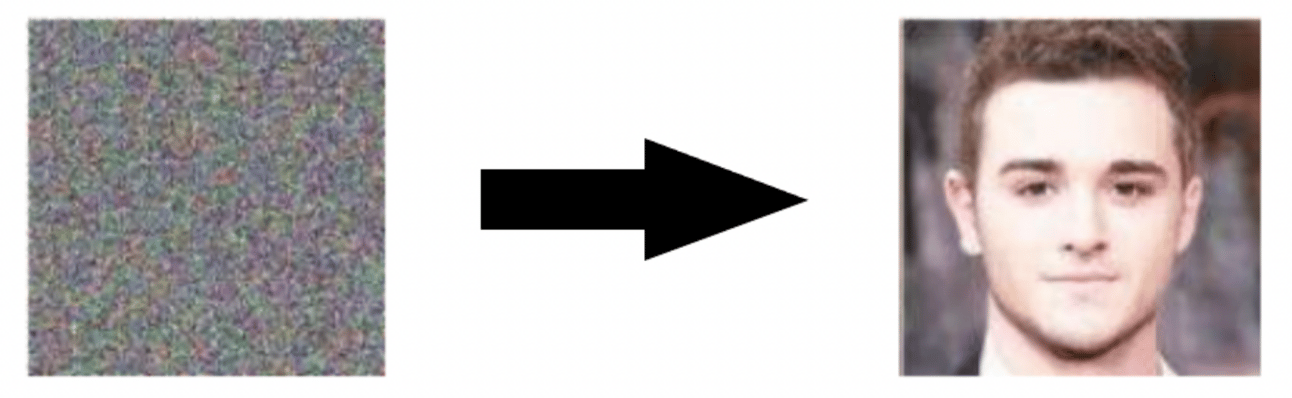

Diffusion models are AI systems that learn to transform an image in a Gaussian (random) distribution of data into a target one.

In other words, they take a ‘noised’ image, they predict the noise it has, and they take it out, uncovering the new image.

The image above portrays the training process. From a ground truth image (left), noise (random pixel values) is consistently added to the image, returning the image on the far right.

At the same time, the model takes in a condition describing the original image, such as “a cat lying down”.

Then, the model has to predict the noise, take it out, and reconstruct the initial image using the text as a ‘clue’.

Consequently, when generating new images, the model can successfully depart from an arbitrary noised ‘canvas’ and a text condition like ‘draw me a cat’ and get a new image that matches the original.

If you’re having trouble comprehending what this actually means, think about this quote from one of the most talented humans to ever work the Earth, the Rennaissance man Michelangelo:

The sculpture is already complete within the marble block. I just have to chisel away the superfluous material.

In a way, the model is doing just the same.

From a bunch of random pixels placed in an arbitrary way, the model is capable of ‘chiseling away’ the noise and uncovering the desired image:

However, not everything was going to be the bells and whistles.

In order to avoid generating the same image every time, the diffusion process always starts from random noise, thus the request “a portrait of a man” will give you slightly different results every time.

This is, in fact, done on purpose, so that the images change every time. But here, the devil lies in the details.

What if we also want to generate consistent images that are loyal to a given reference?

For instance, what if I want to extend the portrait image (right) but make sure that the newly generated part of the image respects the original outfit (left)?

Et voilà.

Just in case you’re wondering, this is NOT me.

Standard Diffusion models always give random outcomes, so the extension will never quite match the original.

With RealFill, that’s not a problem anymore.

It’s all about focusing on WHAT to learn

No matter the use case, or if it’s text, image, or sound. Neural networks, in essence, all learn the same way: by using gradient descent to optimize against a given loss function.

If we think of ChatGPT, that is minimizing the chances of not guessing the right word in a sentence. For Stable Diffusion, that is minimizing the chances of not reconstructing the initial image correctly.

Thus, it’s all about figuring out how to measure that ‘difference’ and simply tune the neurons in the network so that the difference in result is progressively smaller.

Neural networks are universal function approximators.

For a given task, they learn the set of neurons and activations that allow it to learn the function that models that task, that being predicting the next word (ChatGPT) or generating new parts of an image (RealFill).

No matter the use case, in essence the procedure is always the same.

In RealFill’s case, we need to do two things:

Teach the model to reconstruct an initial image following the Diffusion process described earlier

Teach the model to reconstruct only certain parts of an image, i.e. teach the model to inpaint or outpaint an image.

Luckily, we already have the first one, as we can simply take an open-source model like Stable Diffusion.

But for the second task, we need to fine-tune Stable Diffusion following the graph below:

We feed the model several reference images, sampling one image at a time and applying random noise to it (what they call the ‘input image’)

We also feed it a text condition, as we want to be able to determine what the model will generate in the assigned area

As we want it to be loyal to a given reference, we also feed the model the input image with a random patch pattern on top of it

Now, the objective is to reconstruct the visible parts of the patched one.

But the key intuition here is, why are we patching the image?

To succeed in a standard image reconstruction task, the model doesn’t really need to understand that the most salient feature in this image is a girl, as it will basically treat the whole image as a bunch of pixels structured in a particular way.

Thus, during training, the focus of the model is global.

But you may be thinking, ‘I disagree, as models like Stable Diffusion will generate what I ask them to, be that a girl or a gorilla, so that means the model understands what it’s generating.’

And that’s kind of true, but this will only work if the model departs from a complete ‘noised canvas’ to work on.

If you give it 80% of the image and tell it to fill in the missing 20%, that requires locality, and in those tasks, they miserably fail:

Hence, if we force the model to reconstruct only patched (partially visible) images, you are forcing it to pay attention locally.

Reconstructing patched images is the base of autoencoders or Meta’s I-JEPA world model, solutions that force models to pay attention both globally and locally to learn more complex features.

For example, if we patch half of the girl’s face, the model’s objective is no longer generating a girl dancing, it is reconstructing half of a girl's face that also happens to be dancing.

And in inference time?

Inference is just like in any diffusion model, but adding a mask so that the model generates only the missing part of the image.

Conclusively, partial reconstruction means a more complex understanding of what it has to generate and how to stay loyal to a given reference.

Magical but practical features

The best thing about RealFill is that it’s most probably already an actual, available product.

Seeing that the paper was released almost at the same time Google announced its Pixel 8 smartphone new image editing features, it’s pretty much clear this is based on RealFill.

But RealFill also has its drawbacks.

As this BBC article explains, it will be very hard to differentiate reality from fake generations, sparking a controversy regarding ‘how much AI is too much AI’.

So I wonder…

Do you think these editing features are a net positive for society or not? |

🫡 Key contributions 🫡

RealFill is the first AI image generator capable of leveraging reference images to generate plausible and loyal edited images.

It showcases the critical importance of applying locality to AI model training so that the model pays attention to what matters.

🔮 Practical implications 🔮

Google Pixel 8 smartphone most probably leverages RealFill already

Marketing and other industries will have more and more capacity to create fake but realistic images that capture attention and drive sales

👾 Best news of the week 👾

🧐 Nightshade, the ultimate tool to protect artists from AI

🧠 OpenAI’s Chief Scientist shares his views on the future of AI

🫂 A solution to hallucinations, WoodPecker

🥇 Leaders 🥇

The ‘Everything will be an LLM’ paradigm

When two of the brightest minds in the history of Deep Learning share the same opinion about something, you simply listen.

Recently, Andrew Ng, Google Brain co-founder, ex-Chief Scientist at Baidu, founder of Coursera and DeepLearning.ai and Stanford University professor, and Andrej Karpathy, co-founder of OpenAI, ex-Director of AI at Tesla, and also Stanford University professor, have shared the view of what we might call the ‘Everything will be an LLM’ paradigm.

In this world, LLMs like ChatGPT become completely omnipresent in our lives, dictating how we work, how we organize our lives, or even what we see.

In this world, these LLMs become an extension of our body, both physically and digitally, and elevate our lives to a level of comfort, security, and wisdom that no other human in history has had the opportunity to behold.

And they aren’t alone.

Meta, Microsoft, Mistral, or Adept, some of the most prominent AI companies in the world, seem to be pushing this in their own way, and we will dive in to understand how these companies want to make AI to humans almost what oxygen is.

And companies like Apple, a mere observer at this point when it comes to Generative AI, are rumored to be tracing their complete strategy toward this vision, too.

But this path isn’t free from challenges.

For that vision to become a reality, we need to improve current LLMs in terms of efficiency and performance, so we will immerse ourselves into the forefront of flagship AI research, even highlighting papers just days old, to understand how the industry is turning miles into centimeters when it comes to making LLMs improve.

Finally, we will envision how this view fits inside the greatest obsessions of companies like OpenAI, Artificial General Intelligence (AGI), to make a bold bet:

AGI might not be what you think it is.

You get an LLM, you’re friend gets an LLM, everyone gets an LLM

In a small tweet thread last week, Andrej Karpathy talked about a future where your ‘LLMs would talk to mine’.

The Exocortex and the edge

He predicted that in a not-so-distant future, everyone would have an ‘army’ of LLMs that would dictate much of what we do or see on the Internet.

For instance, he proposed ideas like using computer-level LLMs to block any unwanted ads or ads that the LLM knows are of no interest to me.

Moreover, what Andrej truly envisions is what we define as the ‘exocortex’, the hypothetical artificial external information processing system that would augment a brain's biological high-level cognitive processes.

In other words, Andrej thinks that in the near future, all of us will have LLMs acting as this ‘exocortex’ to amplify human cognition to a new level.

This could mean enhancing memory, processing speed, multitasking abilities, or even introducing entirely new cognitive functions that the human brain doesn't naturally possess.

On the other hand, Andrew Ng shares a less ‘CyberPunky’ view: The Cambrian explosion of ‘AI on the edge’.

In a recent newsletter issue of DeepLearning.ai’s The Batch, Andrew foresees a future where researchers get better and better at building hyper-performant LLMs that can be run on small devices such as your laptop or even your smartphone (this is of utmost criticality for Apple’s AI strategy, as we will discuss later).

Andrew argues that a perfect combination of LLM training improvements, high commercial interest from firms like Apple or Microsoft, and growing privacy requirements from the younger generations will cause the AI industry to transcend from huge, know-it-all models like ChatGPT into hyper-specialized smaller models that can be efficiently run in the confined security of our personal devices.

But are the industry and investors really pushing this vision forward?

The Great Question

Go big or go small? This question is becoming common among AI researchers.

On one side, we have AI giants like OpenAI or Anthropic, both actively pursuing the goal of building AGI while looking for ways to train larger and larger AI models.

On the flip side, companies like Apple or Meta seem to be pushing for Generative AI products that are small enough to be used on your phones or your laptop.

And then… there’s Microsoft. As you probably know, Microsoft is the main shareholder of OpenAI, owning almost half the company.

Thus, you may assume that their vision is clearly aligned with OpenAI. But the answer is… not really?

Yes… but no

As shared four days ago when Microsoft posted its FY24 Q1 earnings, everything seemed amazing.

Revenues were up 13%, in almost every category (including, inexplicably, an 8% increase by LinkedIn)… until we reached ‘devices’.

A 22% decrease.

As a great deal of Microsoft’s business model depends on consumer-end hardware and software, this is not great news.

Consequently, any technology that enhances the need for laptops is going to be welcomed by them, especially if we consider they are about to launch their newest super product, Copilot365.

Copilot365 is an AI agent, an LLM like ChatGPT embedded into Office 365 products like Word, Powerpoint, Teams, or Outlook, to boost productivity.

Under the hood, it’s an LLM that is connected to several APIs and has the capacity to execute actions based on user prompts.

A tooled-up ChatGPT, to be clear. Naturally, everyone just assumes that the LLM powering Copilot is ChatGPT.

But is it?

In an article a few weeks ago, it was rumored that Microsoft was scared of the huge costs coming from running huge models like GPT-4 when using them in software like Copilot that’s going to be used by millions in just a few weeks.

Consequently, they were venturing into the idea of using smaller models. A few months ago they released Orca, a 13-billion-parameter model that showed impressive performance that in many instances even surpassed GPT-4.

To train Orca, they used a process called distillation. Distillation involves teaching a student model to imitate a teacher model.

In this case, instead of training a model from scratch to predict the next word, they train a model to imitate the probability distribution of the larger model.

In layman’s terms, this smaller model learns to assign similar probabilities to the next word to what the larger model, often GPT-4, would.

This way, GPT-4’s prediction capabilities are transferred to a much smaller model that, despite being very small, is capable of making predictions only a much larger model would be capable of.

The distilled model is trained using a KL divergence objective loss, that allows it to match the probability distribution of the large model, instead of the usual cross-entropy loss you would use to train a model from scratch.

Instead of forcing a model to learn maths by seeing a lot of maths by itself, you have a teacher telling it how to answer.

However, this process isn’t perfect.

In most instances, the student model will only imitate eloquent answers, but not reasoning.

This makes sense, as if you learn by heart how to do a math problem for an exam, but if they modify certain aspects, you are incapable of solving it because you’re missing the reasoning part.

However, Microsoft took a different approach with Orca. Instead of making it learn to imitate a response, they also taught the model to reason its way into the answer.

Consequently, many assume the LLMs running behind the different Copilots being launched by Microsoft are these models like Orca or Phi-1 (the latter for code), and not ChatGPT as initially thought.

But besides distillation, another option can simply be training a model from scratch more efficiently.

Mistral and Adept lead the way

Recently, a new wave of AI models are being launched that include a handful of surprises that make them not only great models, but also quite cheap to train.

A group of Chinese researchers trained a 100-billion-parameter LLM called FLM-101B with just $100k investment, using a growth strategy where the model was progressively expanded architecture-wise, instead of training models from scratch with the desired size.

Mistral recently launched their Mistral-7B model that incorporates features like Slide-window attention, an evolution of the self-attention mechanism where words only directly attend to a specific number of previous words instead of all of them, severely decreasing the huge computational requirements of vanilla transformers.

My most recent Medium article covers Mistral in detail

Also quite recently, Adept, a top-3 Generative AI company in terms of investment only overshadowed by OpenAI and Anthropic, launched Fuyu, a small model tailored to suit white-collar activities and that is multimodal despite not having an image encoder, as shown below:

Most multimodal models for image and text processing include both an LLM and an image encoder with an adapter to project the encoder’s embeddings into the LLM’s vector space.

Fuyu proposes an alternative where the image is broken down into pixel batches and these batches treat as standard text tokens.

And a paper released just a few days ago signals that models are about to get even smaller. A team of Chinese researchers have managed to quantize a model to just 4 bits per parameter.

In layman’s terms, this means that if you have a model with 100 billion parameters at full precision (32 bits, or 4 bytes) that would mean that the model requires, just to be hosted in memory (a requirement for Transformers) 400 GB.

With 4-bit quantization, that number decreases by 8-fold to 50GB. From needing at least 6 state-of-the-art GPUs to just one.

But besides technological advances, other aspects help support the idea that this is the way to go.

And the most exciting thing is that this is probably the best way to achieve AGI.

But why?

Subscribe to Full Premium package to read the rest.

Become a paying subscriber of Full Premium package to get access to this post and other subscriber-only content.

Already a paying subscriber? Sign In.

A subscription gets you:

- • NO ADS

- • An additional insights email on Tuesdays

- • Gain access to TheWhiteBox's knowledge base to access four times more content than the free version on markets, cutting-edge research, company deep dives, AI engineering tips, & more