MindEye, a new mass surveillance tool or the key to understanding the brain?

🏝 TheTechOasis 🏝

It’s becoming really hard these days to find AI news not directly related to ChatGPT or Bard.

But the truth is that AI is poised to disrupt almost every aspect of our lives, including neuroscience.

Now, a recent breakthrough is giving us a glimpse of a future where minds can be read and the mysteries of some of humanity’s least understood illnesses are unraveled.

The language of machines

At the end of the day, every AI application involves some sort of transformation.

That is, it’s all about ingesting data and transforming it into useful stuff, be that a new text sequence, or a new image.

In particular, each model has its own way of understanding our world.

Its own “latent space”.

For this, models encapsulate their gained knowledge into vectors, forming an abstract, numerical representation of what our world means to them, grouping vectors that are similar, and separating those that aren’t.
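To make the idea of "similar vectors sit close together" concrete, here is a minimal sketch using cosine similarity, a common way to compare embeddings. The three-dimensional vectors below are hand-written, hypothetical values chosen for illustration; real models learn theirs from data and use hundreds of dimensions.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy embeddings (hypothetical values, not taken from any real model).
embeddings = {
    "dog": np.array([0.9, 0.8, 0.1]),
    "puppy": np.array([0.85, 0.9, 0.15]),
    "car": np.array([0.1, 0.2, 0.95]),
}

sim_dog_puppy = cosine_similarity(embeddings["dog"], embeddings["puppy"])
sim_dog_car = cosine_similarity(embeddings["dog"], embeddings["car"])

print(f"dog vs puppy: {sim_dog_puppy:.3f}")
print(f"dog vs car:   {sim_dog_car:.3f}")
```

In this toy space, "dog" and "puppy" score much closer to 1 than "dog" and "car", which is the grouping behavior described above.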

If we were capable of drawing this (we can’t, these vectors have more than 500 dimensions), it would look something like this:

The problem is that each model has its own “latent space”, and these spaces usually aren’t comparable with one another (a “dog” in ChatGPT’s world is different from a “dog” in Bard’s).

Consequently, a great deal of AI research these days is focused on bridging these worlds to achieve “multimodality”: models that can process data in different forms, like text or images, and map them into the same latent space.

In layman’s terms, multimodal models are capable of understanding that the word “dog” and an image of a dog refer to the same thing.

Now, a stacked team of researchers from Princeton and Stability AI, among others, has achieved an incredible milestone: mapping fMRI scans into image vector spaces.

Put simply, AI can now read your mind, and you’re about to understand why.

Bridging brain scans and images

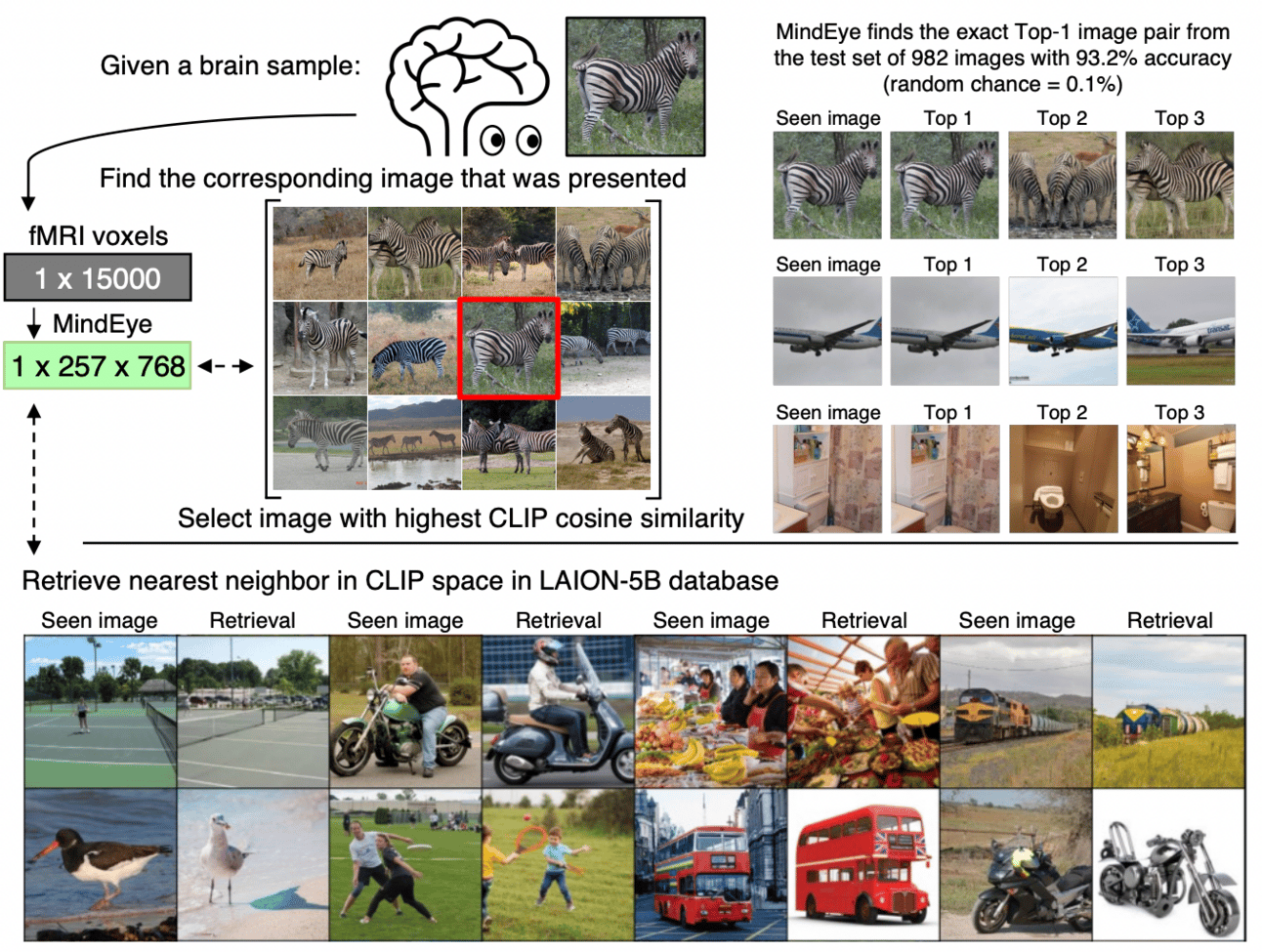

In very simple terms, MindEye is a new AI model that is capable of two things:

- Image reconstruction

- Image retrieval

And the impressive thing is that the model can do both by simply looking at fMRI scans… without actually seeing the original image.

For instance, MindEye is capable of recreating images that closely resemble the original image seen by the human subject.

Regarding image retrieval, the results are equally impressive:

Seems like alien technology, but how does MindEye work?

Mapping the worlds of fMRI and Images

Judging by the image below, MindEye looks a lot more complicated than it actually is.

It’s composed of two different pipelines:

- A high-level pipeline optimized to achieve highly semantic image retrievals and recreations

- A low-level pipeline optimized to achieve accuracy in low-level metrics like color, texture, or spatial position

In layperson’s terms, if the original image was a “man surfing a wave”, the former ensures that the output image represents that, and the latter ensures that the structure of the image, like the position of the surfer, is respected.

To create this remarkable solution, MindEye is made up of six components:

- CLIP encoder

- MLP backbone

- MLP projector

- Stable Diffusion autoencoder

- Diffusion prior

- Image generation decoder

Firstly, the CLIP encoder simply encodes (transforms) the original images seen by the person whose brain was scanned into CLIP’s latent space.

Then, the MLP backbone will transform the brain’s fMRI voxels (3-dimensional cubes of cortical tissue) into a vector embedding too. This backbone is used both for image retrieval and recreation.
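As a rough illustration of what "an MLP turns voxels into a vector" means, here is a minimal NumPy sketch of a two-layer MLP forward pass. Everything specific in it is an assumption made for the example: the sizes (`N_VOXELS`, `HIDDEN`, `EMBED_DIM`), the random untrained weights, and the fake input scans. It is not MindEye's actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes chosen for illustration only.
N_VOXELS = 1000   # flattened fMRI voxels per scan
HIDDEN = 256      # hidden layer width
EMBED_DIM = 64    # size of the output embedding


def init_mlp(sizes, rng):
    """Create (weights, bias) pairs for consecutive layer sizes."""
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def mlp_forward(x, params):
    """Apply each linear layer, with ReLU on every layer except the last."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


params = init_mlp([N_VOXELS, HIDDEN, EMBED_DIM], rng)
voxels = rng.normal(size=(4, N_VOXELS))       # a batch of 4 fake scans
brain_embeddings = mlp_forward(voxels, params)

print(brain_embeddings.shape)  # (4, 64)
```

In a real system the weights would be trained so that each scan's embedding lands near the embedding of the image the person was looking at.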

Image retrieval

An MLP projector then maps the backbone’s vector into CLIP’s latent space.

Put simply, the original image and the brain scans resulting from a human seeing that image will have almost identical vectors positioned in the same vector space, thus allowing MindEye to retrieve the exact image from the dataset.
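Once both kinds of vectors live in the same space, retrieval boils down to a nearest-neighbor search. The sketch below assumes cosine similarity as the distance measure and uses random stand-in vectors; the helper names (`normalize`, `retrieve`) and the way the "brain" vector is faked (an image vector plus a little noise) are hypothetical and only meant to show the mechanics.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def retrieve(brain_embedding: np.ndarray, image_embeddings: np.ndarray):
    """Return the index of the most similar image and all similarity scores."""
    sims = normalize(image_embeddings) @ normalize(brain_embedding)
    return int(np.argmax(sims)), sims


rng = np.random.default_rng(42)

# Stand-in for the embeddings of 5 candidate images (random, 16 dimensions).
image_embeddings = rng.normal(size=(5, 16))

# Fake the brain-derived vector: the true image's vector plus small noise.
true_index = 3
brain_embedding = image_embeddings[true_index] + 0.1 * rng.normal(size=16)

best_index, sims = retrieve(brain_embedding, image_embeddings)
print("retrieved image index:", best_index)
```

The better the fMRI-to-CLIP mapping, the smaller that "noise" is in practice, and the more often the top match is the image the person actually saw.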

Image recreation

In parallel, a diffusion prior transforms the backbone’s vector into a new vector that can be used to generate a novel image that should resemble the original one.

Simultaneously, in the low-level pipeline, the backbone’s vector is also passed to Stable Diffusion’s autoencoder and used to generate a rough, blurry representation of the original image (the blurred illustration in the previous image).

This step isn’t strictly necessary, but it plays an important role in the quality of the result.

In any diffusion model, like Midjourney or Stable Diffusion, the model starts from a noisy image and gradually removes the noise, step by step, until a new image emerges.

Diffusion process (from right to left)

This process usually starts from an image of pure random noise, but if we’re capable of starting from a better position, the chances of achieving a high-quality result increase.

Put simply, it’s easier to draw a cat if the cat’s figure has been previously drawn. Therefore, the output of the low-level pipeline is used as guidance.
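One common way to "start from a better position" is to take a rough image and only partially noise it, using the standard diffusion forward formula x_t = sqrt(ᾱ) · x_0 + sqrt(1 − ᾱ) · ε. The sketch below assumes that approach purely for illustration; the article does not confirm this is exactly how MindEye uses its blurry image, and the 32x32 gradient standing in for the low-level output, as well as the chosen `alpha_bar` values, are made up.

```python
import numpy as np


def add_noise(image: np.ndarray, alpha_bar: float, rng) -> np.ndarray:
    """Standard forward-noising step: keep sqrt(alpha_bar) of the signal."""
    noise = rng.normal(size=image.shape)
    return np.sqrt(alpha_bar) * image + np.sqrt(1.0 - alpha_bar) * noise


def correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation between two images, flattened."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])


rng = np.random.default_rng(0)

# Stand-in for the blurry low-level output: a smooth 32x32 gradient,
# rescaled to unit standard deviation.
blurry_guess = np.outer(np.linspace(-1, 1, 32), np.linspace(-1, 1, 32))
blurry_guess = blurry_guess / blurry_guess.std()

# Guided start: half signal, half noise. Unguided start: pure noise.
guided_start = add_noise(blurry_guess, alpha_bar=0.5, rng=rng)
random_start = add_noise(blurry_guess, alpha_bar=0.0, rng=rng)

corr_guided = correlation(guided_start, blurry_guess)
corr_random = correlation(random_start, blurry_guess)

print(f"guided start vs guess: {corr_guided:.2f}")
print(f"random start vs guess: {corr_random:.2f}")
```

The guided starting point still carries the rough layout of the guess, while the pure-noise start carries none of it, so the denoising steps have less work to do to recover the structure.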

Finally, the resulting embedding from the diffusion prior is inserted into an image generation model that generates the image.

Et voilà.

After seeing this, one is tempted to ask the question:

Has humanity built the first global mind reader?

Fortunately, not quite... yet.

Mass surveillance or curing depression?

Of course, the first thing that comes to mind is mass surveillance.

Will governments use MindEye to track our thoughts?

Is this the end of freedom of mind?

Well, no.

Although MindEye is unequivocally a step in that direction, today’s “mind readers” are easy to defeat.

The model clearly fails when the person being scanned tries to resist the mind reading, and fMRI scans are highly person-specific, meaning that MindEye won’t work well on people whose brains it hasn’t been trained on.

On the flip side, MindEye has huge potential to help us humans understand how the brain and its associated illnesses work.

For instance, we could reconstruct images from a person with severe depression, gaining insights into how the illness affects the brain.

We could also study people in a state of pseudocoma, and build images of what they are thinking about when they are in that state.

AI moves fast

With MindEye, we’re one step closer to understanding our own brains.

As AI breaks down the limits of what humans can achieve, it also opens a world of possibilities for those willing to leverage it.

AI is moving fast, so what are you going to do about it?

Key AI concepts you’ve learned today:

- How to build a mind-reading machine

- How scientists are trying to understand the human brain

- The concept of a model’s latent space

👾Top AI news for the week👾

😍 Watch man regain feeling and movement thanks to AI

🤥 OpenAI shuts down its AI detector for its “low accuracy”

🕹 Last week we talked about LLaMa, now try it here!

🥸 Microsoft, Google, OpenAI, and Anthropic create the Frontier Model Forum